DBSeeder - Relational Database Data Generator.

1. Introduction

DBSeeder allows the flexible generation of large amounts of anonymised random dummy data for selected relational database systems (RDBMS) - useful e.g. for stress testing.

The database schema underlying the data generation can be freely defined.

The names of the database, the schema and the user can be freely chosen, unless the respective database management system contains restrictions.

If the selected database, schema or user already exist, they are deleted with all including data.

DBSeeder then creates the selected database, schema or user and generates the desired dummy data.

A maximum of 2 147 483 647 rows can be generated per database table.

The database schema to be used, that is, the required database tables can be user defined using a JSON file.

Details can be found here: Database Schema.

Currently, depending on the capabilities of the specific RDBMS, the following functionalities and data types are supported:

- constraints

- foreign (referential) key

- not null constraint

- primary key

- unique (alternate) key

- data types

- BIGINT - large integer

- BLOB - large binary object

- CLOB - large character Object

- TIMESTAMP - timestamp including date

- VARCHAR - variable text

The database systems considered meet the following conditions:

- The database system is freely available in a documented docker image for testing purposes.

- The database system provides a well documented JDBC interface.

- A complete documentation of the SQL commands is available.

1.1 RDBMS Overview

| RDBMS | Ticker Symbol(s) | RDBMS Versions | Latest JDBC |

|---|---|---|---|

| AgensGraph | agens | v2.1.1 - v2.13.0 | 1.4.2-c1 |

| Apache Derby | derby, derby_emb | 10.16.1.1 | 10.16.1.1 |

| CockroachDB | cockroach | v20.2.5 - v22.1.12 | see PostgreSQL |

| CrateDB | cratedb | 4.1.6 - 5.1.12 | 2.6.0 |

| CUBRID | cubrid | 10.2 - 11.2 | 11.1.0.0028 |

| Exasol | exasol | 6.2.8-d1 - 7.1.16 | 7.1.16 |

| Firebird | firebird | 3.0.5 - v4.0.2 | 4.0.8.java11 |

| H2 Database Engine | h2, h2_emb | 1.4.200 - 2.1.214 | 2.1.214 |

| HeavyDB | heavy | v5.6.1 - v6.2.0 | 6.1.0 |

| HSQLDB | hsqldb, hsqldb_emb | 2.5.1 - 2.7.1 | 2.7.1 |

| IBM Db2 Database | ibmdb2 | 11.5.1.0 - 11.5.8.0 | 11.5.8.0 |

| IBM Informix | informix | 14.10 FC3DE - | 4.50.9 |

| 14.10.FC7W1DE | |||

| MariaDB Server | mariadb | 10.4.13 - 10.10.2 | 3.1.0 |

| Mimer SQL | mimer | v11.0.3c - v11.0.5a | 3.42.3 |

| MonetDB | monetdb | Jun2020-SP1 - Sep2022-SP1 | 3.2.jre8 |

| MySQL Database | mysql | 8.0.20 - 8.0.31 | 8.0.31 |

| Oracle Database | oracle | 12.1.0.2 - 21.3.0 | 21.8.0.0 |

| Percona Server for MySQL | percona | 8.0.28-20 - 8.0.30-22 | see MySQL |

| PostgreSQL | postgresql | 12.3 - 15.1-alpine | 42.5.1 |

| SQL Server | sqlserver | 2019-CU12-ubuntu-20.04 - | 11.2.2.jre18 |

| 2022-latest | |||

| SQLite | sqlite | 3.40.0.0 | |

| TimescaleDB | timescale | 2.3.1-pg13 - 2.9.0-pg14 | see PostgreSQL |

| trino | mysql_trino, | 339 - 403 | 403 |

| oracle_trino, | |||

| postgresql_trino, | |||

| sqlserver_trino | |||

| VoltDB | voltdb | 9.2.1 | 11.4.2 |

| YugabyteDB | yugabyte | 2.2.2.0-b15 - | see PostgreSQL |

| 2.17.0.0-b24 |

1.2 RDBMS Directory

The following database systems are included in the current version of DBSeeder:

- AgensGraph

- client only version

- commercial, open source

- derived from PostgreSQL

- property graph model and relational model

- Apache Derby

- client and embedded version

- open source

- relational model

- CockroachDB

- client only version

- commercial, open source

- compatible with PostgreSQL JDBC

- relational model

- CrateDB

- client only version

- commercial, open source

- compatible with PostgreSQL

- relational model

- CUBRID

- client only version

- compatible with MySQL

- open source

- relational model

- Exasol

- client only version

- commercial

- in-memory, column-oriented, relational model

- Firebird

- client and embedded (not supported here) version

- open source

- relational model

- H2 Database Engine

- client and embedded version

- compatible with HSQLDB, PostgreSQL

- open source

- relational model

- HeavyDB

- client only version

- commercial, open source

- GPU and CPU version

- relational model

- HSQLDB

- client and embedded version

- open source

- relational model

- IBM Db2 Database

- client only version

- commercial

- relational model

- IBM Informix

- client only version

- commercial

- relational model

- MariaDB Server

- client only version

- open source

- derived from MySQL

- relational model

- Mimer SQL

- client only version

- commercial

- relational model

- MonetDB

- client only version

- open source

- column-oriented relational model

- MySQL Database

- client only version

- open source

- relational model

- Oracle Database

- client only version

- commercial

- relational model

- Percona Server for MySQL

- client only version

- commercial, open source

- derived from MySQL

- relational model

- PostgreSQL

- client only version

- open source

- relational model

- SQL Server

- client only version

- commercial

- derived from Adaptive Server Enterprise

- relational model

- SQLite

- commercial, open source

- embedded only version

- relational model

- TimescaleDB

- client only version

- commercial, open source

- derived from PostgreSQL

- relational model

- trino

- compatible with Accumulo, Cassandra, Elasticsearch, Hive, Kudu, MongoDB, MySQL, Pinot, PostgreSQL, Redis, Redshift

- distributed query engine

- open source

For the RDBMS MySQL, Oracle, PostgreSQL and SQL Server the JDBC driver from trino can optionally be used instead of the original JDBC driver. The prerequisite for this is that trino is either installed locally (Linux) or is available as a Docker container (Linux and Windows). Details can be found here: trino.

- VoltDB

- client only version

- commercial, open source

- derived from H-Store, HSQLDB

- in-memory relational model

- YugabyteDB

- client only version

- commercial, open source

- compatible with Cassandra, PostgreSQL, Redis

- derived from PostgreSQL, RocksDB

- inspired by Cloud Spanner

- relational model

2. Data

2.1 Database Schema

The underlying database schema is defined in a JSON-based parameter file and the associated program code is generated and compiled with the script scripts/run_db_seeder_generate_schema.

To validate the database schema in the JSON parameter file, the JSON schema file db_seeder_schema.schema.json in the directory src/main/resources is used.

2.1.1 Structure of the Database Schema Definition File

The definition of a database schema consists of the object global with the global parameters and the array tables, which contains the definition of the database tables.

2.1.1.1 globals - Global Parameters

defaultNumberOfRows- default value for the number of table rows to be generated, if no value is specified in the table definitionencodingISO_8859_1- a string with Western Latin characters is inserted into generated character columnsencodingUTF_8- a string with simplified Chinese characters is inserted into generated character columns specified in the table definitionnullFactor- determines the proportion of NULL values in optional columns and must be between 2 and 99 (inclusive): 2 means 50%, 4 means 25%, 10 means 10%, etc., default value is 4

2.1.1.2 tables - Database Table Definitions

tableName- database table namenumberOfRows- number of table rows to be generatedcolumns- an array of column definitionscolumnName- column namedataType- data type, is one of BIGINT, BLOB, CLOB, TIMESTAMP or VARCHARsize- for data type VARCHAR the maximum size of the column valueprecision- currently not usednotNull- is a NULL value allowed ?primaryKey- is this the primary key column ?references- an array of foreign key definitionsreferenceTable- name of the reference database tablereferenceColumn- name of the reference column

defaultValueInteger- default value for integer columnsdefaultValueString- default value for alphanumeric columnslowerRangeInteger- lower limit for an integer column, requires also an upper limitlowerRangeString- lower limit for an alphanumeric column, requires also an upper limitupperRangeInteger- upper limit for an integer columnupperRangeString- upper limit for an alphanumeric columnvalidValuesInteger- valid values for an integer columnvalidValuesString- valid values for an alphanumeric column

tableConstraints- an array of table constraint definitionsconstraintType- constraint type, is one of FOREIGN, PRIMARY or UNIQUEcolumns- an arry with the names of the affected columnsreferenceTable- name of the reference database table, only for foreign keysreferenceColumns- an arry with the names of the affected reference columns, only for foreign keys

Only either a range restriction (lowerRange..., upperRange...) or a value restriction (validValues...) may be specified for each column.

2.1.2 Mapping of Data Types in the JDBC Driver

| Data Type | JDBC Method |

|---|---|

BIGINT |

setLong |

BLOB |

setBytes |

CLOB |

setString |

TIMESTAMP |

setTimestamp |

VARCHAR |

setNString (Firebird, MariaDB, MS SQL SERVER and Oracle) |

setString (else) |

2.1.3 Example File db_seeder_schema.company_9...9.json in the Directory resources/json

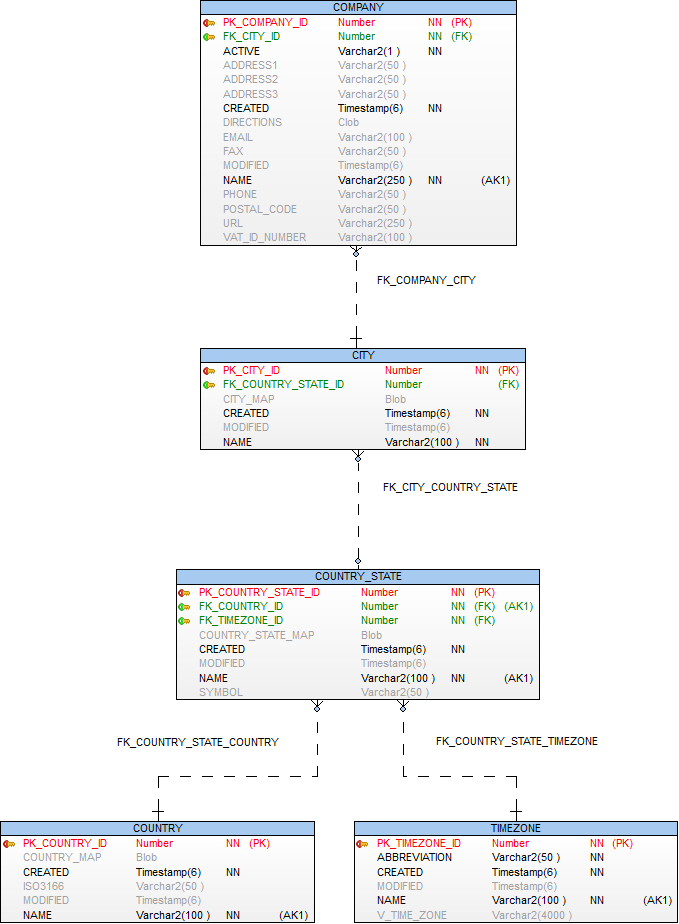

This file contains the definition of a simple database schema consisting of the database tables CITY, COMPANY, COUNTRY, COUNTRY_STATE and TIMEZONE.

The abbreviations in the following illustration (created with Toad Data Modeler) mean:

- (AK1) - alternate key (unique key)

- FK - foreign key

- NN - not null

- PK - primary key

2.2 Construction of the Dummy Data Content

The proportion of NULL values in optional columns is defined by the global parameter nullFactor.

All methods for generating column contents can be overwritten if necessary.

2.2.1 BIGINT

Java method: getContentBigint

- If the column parameter

validValuesIntegeris defined in the database schema, a random value is taken from it. - If the column parameters

lowerRangeIntegerandupperRangeIntegerare defined in the database schema, a random value is taken from this interval. - Otherwise the counter for the current row (row number) is used.

2.2.2 BLOB

Java method: getContentBlob

- The content of the file

blob.pngfrom the resource directory (src/main/resources) is loaded into these columns.This file contains the company logo of Konnexions GmBH.

2.2.3 CLOB

Java method: getContentClob

- The content of the file

clob.mdfrom the resource directory (src/main/resources) is loaded into these columns. This file contains the text of the Konnexions Public License (KX-PL).

2.2.4 TIMESTAMP

Java method: getContentTimestamp

- A randomly generated timestamp is assigned to all columns that can contain temporal data.

2.2.5 VARCHAR

Java method: getContentVarchar

- If the column parameter

validValuesStringis defined in the database schema, a random value is taken from it. - If the column parameters

lowerRangeStringandupperRangeStringare defined in the database schema, a random value is taken from this interval. - Otherwise content of the column is constructed depending on the row number and the encoding flags as follows:

- ASCII (all rows where the index modulo 3 is 0):

- column name in capital letters

- underscore

_ - current row number left-justified

- ISO 8859 1 (all rows where the index modulo 3 is 1) :

- column name in capital letters

- underscore

_ - a string containing specific Western European characters with accent (e.g. French, Portuguese or Spanish)

- underscore

_ - current row number left-justified

- the ISO 8859 1 version can be prevented by choosing

encodingISO_8859_1falsein the database schema definition - UTF-8 (all rows where the index modulo 3 is 2):

- column name in capital letters

- underscore

_ - a string containing simplified Chinese characters

- underscore

_ - current row number left-justified

- the UTF-8 version can be prevented by choosing

encodingUTF_8falsein the database schema definition - If the resulting value exceeds the permissible column size, the value is shortened accordingly from the left

- ASCII (all rows where the index modulo 3 is 0):

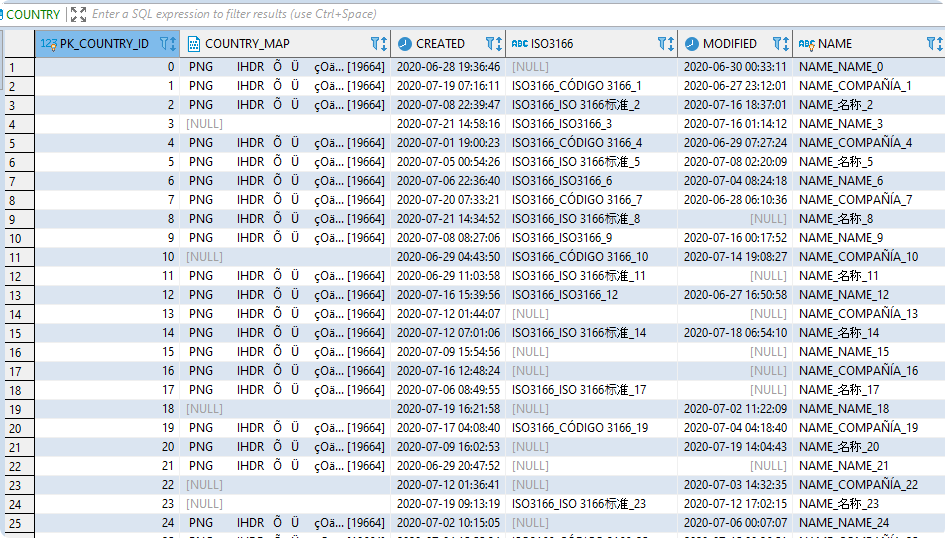

2.2.6 Examples

1. Table CITY

2. Table COUNTRY

3. Table TIMEZONE

3. Installation

The easiest way is to download a current release of DBSeeder from the GitHub repository.

You can find the necessary link here.

To download the repository Git is needed and for compilation the Gradle Build Tool and the open-source JDK are needed.

For changes to the DBSeeder repository it is best to use an editor (e.g. Vim) or an IDE (e.g. Eclipse IDE).

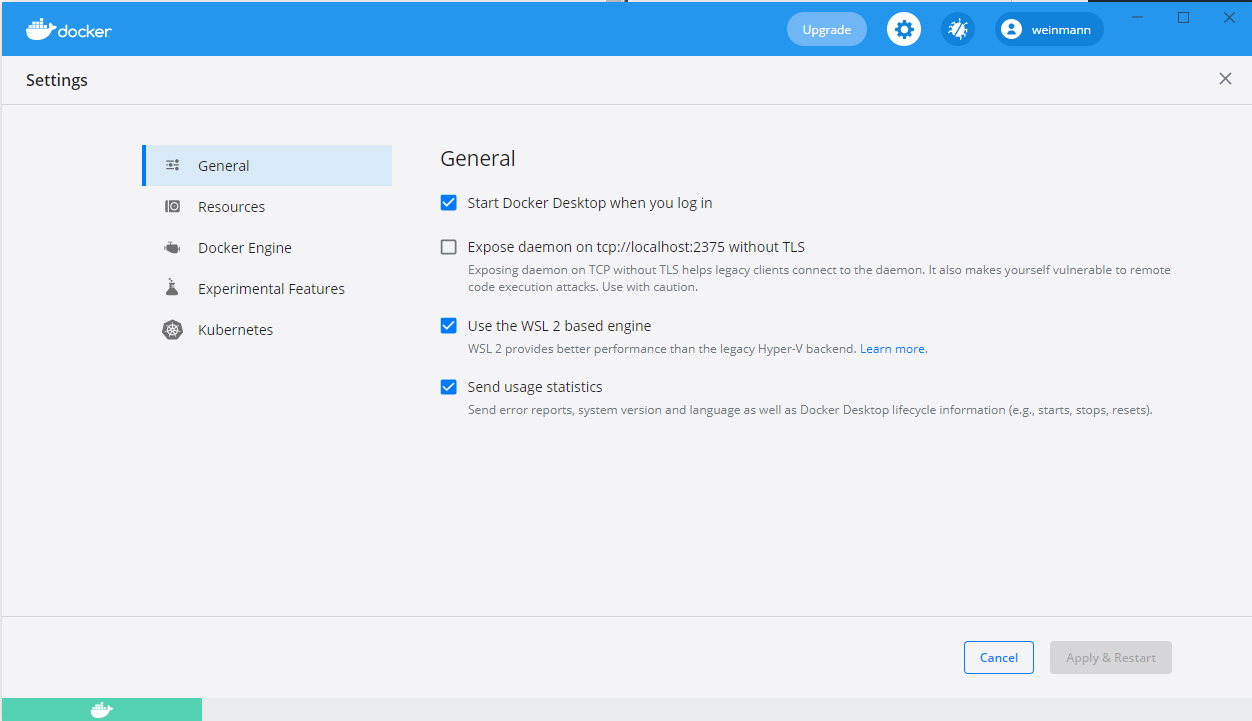

For using the Docker Image based databases in operational mode, Docker Desktop must also be installed.

For the respective software versions, please consult the document release notes.

4. Operating Instructions

4.1 Script run_db_seeder

Using the DBSeeder development and operational Docker image from Docker Hub (see here) eliminates the need to install the runtime environment.

With the script run_db_seeder the complete functionality of the DBSeeder application can be used:

- Creating a suitable database

- Generation of any number of dummy data.

All scripts are available in a Windows version (cmd / .bat) as well as in a Unix version (bash / .sh).

To run the scripts, apart from the prerequisites as release notes (ReleaseNotes.md),

only the libraries in the lib directory and the corresponding script of run_db_seeder are required.

The creation of the databases also requires a working access to Docker Hub.

All control parameters used in DBSeeder (see section 4.3) can be adapted in the scripts to specific needs.

The run_db_seeder script is controlled by the following script parameters::

DB_SEEDER_DBMS: the ticker symbol of the desired database management system (default valuesqlite) orcompletefor all implemented RDBMS.DB_SEEDER_SETUP_DBMS: should an empty database be created:yes: a new database is created based on a suitable Docker image- otherwise: no database is created

DB_SEEDER_NO_CREATE_RUNS: Number of dummy data generation runs:- 1: one run

- 2: two runs

- otherwise: no run

For the run variants complete, complete_client, complete_emb and complete_trino, statistics files with the following data name structure are created in the file directory resources/statistics by default:

db_seeder_<bash|cmd>_<run variant>_unknown_<DBSeeder release>_<vmware|win10|wsl2>.<csv|tsv>

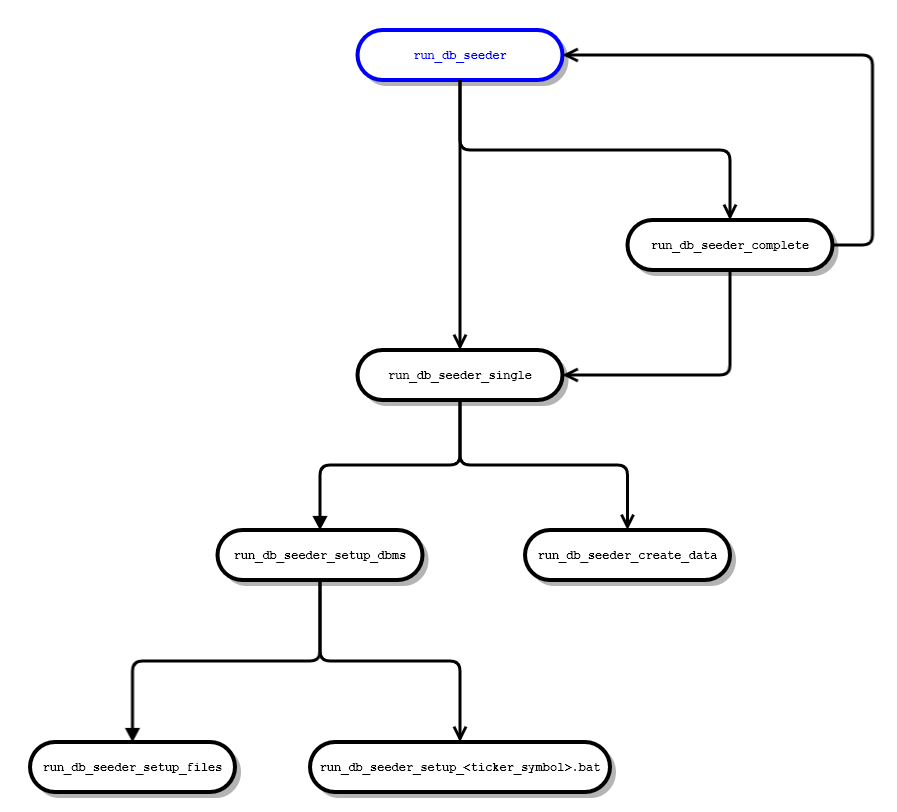

An overview of the structure of the scripts used can be taken from the following diagram:

4.2 Operation Possibilities

DBSeeder is tested under Ubuntu and Microsoft Windows.

In addition, tests are always performed in Windows with Ubuntu under the Windows Subsystem for Linux (WSL).

Besides one of the two operating systems, these are the minimum requirements for running DBSeeder:

Details on the required software versions can be found in the release notes.

Special Features for the Operation with Ubuntu

-

A suitable image is available on Docker Hub for development and operation, see here.

-

In the directory

scripts/3.0.6are the two scriptsrun_install_4_vm_wsl2_1.shandrun_install_4_vm_wsl2_1.shwith which an Ubuntu environment can be prepared for development and operation. -

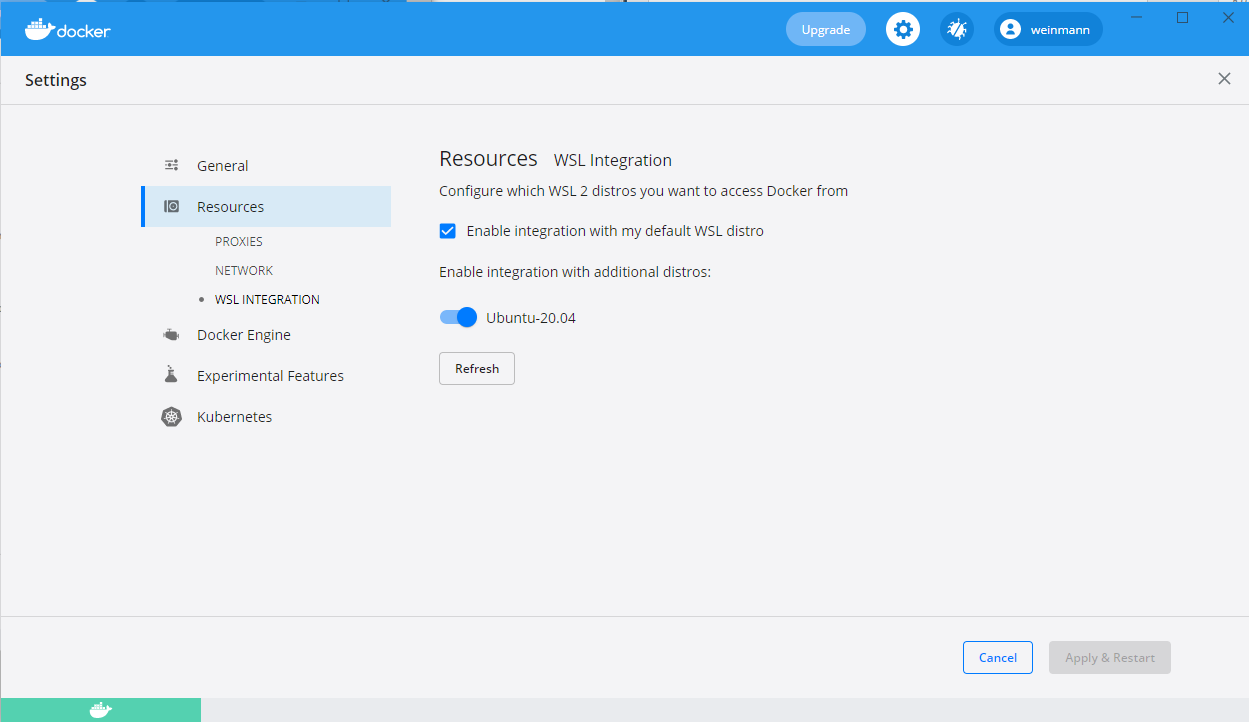

If the Windows Subsystem for Linux (WSL) is to be used, then the

WSL INTEGRATIONfor Ubuntu must be activated in Docker

4.3 Control Parameters

4.3.1 Supported Parameters

The flow control parameters for DBSeeder are stored in the properties file src/main/resources/db_seeder.properties and can all be overridden by the environment variables defined in the scripts.

The following control parameters are currently supported:

db_seeder.batch.size=0

db_seeder.character.set.server=

db_seeder.collation.server=

db_seeder.connection.host=

db_seeder.connection.host.trino=

db_seeder.connection.port=0

db_seeder.connection.port.trino=0

db_seeder.connection.prefix=

db_seeder.connection.service=

db_seeder.connection.suffix=

db_seeder.database.sys=

db_seeder.database=

db_seeder.drop.constraints=

db_seeder.file.configuration.name=yes

db_seeder.file.improvement.header=DBMS;Type;ms;Constraints;Improvement

db_seeder.file.improvement.name=

db_seeder.file.json.name=resources/json/db_seeder_schema.company_5400.json

db_seeder.file.statistics.delimiter=\t

db_seeder.file.statistics.header=ticker symbol;DBMS;db type;total ms;start time;end time;host name;no. cores;operating system;total DDL ms;drop constr. ms;add constr. ms;total DML ms;constraints

db_seeder.file.statistics.name=resources/statistics/db_seeder_statistics.tsv

db_seeder.file.summary.name=

db_seeder.file.summary.source=resources/statistics

db_seeder.password.sys=

db_seeder.password=

db_seeder.schema=

db_seeder.user.sys=

db_seeder.user=

4.3.2 Explanation and Cross-reference

| Property incl. Default Value [db.seeder.] | Environment Variable [DB_SEEDER_] | Used By | Description |

|---|---|---|---|

| batch.size=<9...9> | BATCH_SIZE | all RDBMS except | number of insert operations for the bulk operation, default value 0 (a single bulk operation for each database table) |

| character.set.server= |

CHARACTER_SET_SERVER | mariadb | default server character set |

| collation.server= |

COLLATION_SERVER | mariadb | default server collation |

| connection.host= |

CONNECTION_HOST | all client RDBMS | host name or ip address of the database server |

| connection.host_trino= |

CONNECTION_HOST_TRINO | trino | host name or ip address of the trino |

| connection.port=<9...9> | CONNECTION_PORT | all client RDBMS | port number of the database server |

| connection.port_trino=<9...9> | CONNECTION_PORT_TRINO | trino | port number of the trino |

| connection.prefix= |

CONNECTION_PREFIX | all RDBMS | prefix of the database connection string |

| connection.service= |

CONNECTION_SERVICE | oracle | service name of the database connection string |

| connection.suffix= |

CONNECTION_SUFFIX | firebird, hsqldb, mysql, percona, voltdb | suffix of the database connection string |

| database.sys= |

DATABASE_SYS | agens, cockroach, heavy, informix, mariadb, mimer, monetdb, mysql, percona, | privileged database name |

| postgresql, sqlserver, timescale, yugabyte | |||

| database= |

DATABASE | all RDBMS except cratedb, exasol, monetdb, oracle, voltdb | database name |

| drop.constraints= |

DROP_CONSTRAINTS | all RDBMS except cockroach, cratedb, h2, heavy, sqlite, trino | drop all contraints before the DML operations and recreate them afterwards |

| file.configuration.name= |

FILE_CONFIGURATION_NAME | n/a | directory and file name of the DBSeeder configuration file |

| file.improvement.header= |

FILE_IMPROVEMENT_HEADER | all RDBMS | header line of the improvement file created in run_db_seeder_compute_improvement |

| file.improvement.name= |

FILE_IMPROVEMENT_NAME | all RDBMS | directory and file name of the DBSeeder improvement file created in run_db_seeder_compute_improvement |

| file.json.name= |

FILE_JSON_NAME | scripts/run_db_seeder_generate_schema | directory and file name of the JSON file containing the database schema |

| file.statistics.delimiter= |

FILE_STATISTICS_DELIMITER | all RDBMS | separator of the statistics file created in run_db_seeder |

| file.statistics.header= |

FILE_STATISTICS_HEADER | all RDBMS | header line of the statistics file created in run_db_seeder |

| file.statistics.name= |

FILE_STATISTICS_NAME | all RDBMS | file name of the statistics file created in run_db_seeder |

| file.summary.name= |

FILE_SUMMARY_NAME | all RDBMS | file name of the summary statistics file created in run_db_seeder_create_summary |

| file.summary.source= |

FILE_SUMMARY_SOURCE | all RDBMS | directory name(s) (separated by semicolon) of the source directories containing statistics files |

| password.sys= |

PASSWORD_SYS | agens, exasol, firebird, heavy, ibmdb2, informix, mariadb, mimer, monetdb, mysql, | password of the privileged user |

| oracle, percona, postgresql, sqlserver, timescale | |||

| password= |

PASSWORD | all RDBMS except cockroach, derby, ibmdb2, informix | password of the normal user |

| schema=kxn_schema | SCHEMA | agens, derby, exasol, h2, hsqldb, ibmdb2, monetdb, postgresql, sqlserver, | schema name |

| timescale, yugabyte | |||

| user.sys= |

USER_SYS | all RDBMS except derby, voltdb | name of the privileged user |

| user=kxn_user | USER | all RDBMS except derby, ibmdb2, informix | name of the normal user |

4.4 Statistics

Each new release is completed with the creation of 7 statistics files in the file directory resources/statistics.

The data contained in these files show the DDL and DML performance of the individual databases under identical conditions:

- Operating systems: Ubuntu with VMware Workstation Player, Ubuntu with WSL (Windoiws Subsystem for Linux) on Windows and Windows.

..._vmware.tsv: Ubuntu with VMware Workstation Player on Windows...._win10.tsv: Windows 10....._wsl2.tsv: Ubuntu LTS with Windows Subsystem for Linux 2 on Windows

- DDL: Creation of the database schema consisting of the 5 relational tables CITY, COMPANY, COUNTRY, COUNTRY_STATE and TIMEZONE (see JSON file:

resources/json/db_seeder_schema.company_5400.json). - DML: Insert records into these database tables - CITY 1800, COMPANY 5400, COUNTRY 200, COUNTRY_STATE 600 and TIMEZONE 11.

- If possible, two runs are made for each database system: one run with constraints enabled and one run with constraints disabled - see column

constraints:active: constraints are enabledactive - no choice: constraints are enabled and disabling is not possibleinactive: constraints are disabled

The creation of these statistics files is managed by the following control parameters (see here):

db_seeder.file.improvement.header=DBMS;Type;ms;Constraints;Improvement

db_seeder.file.improvement.name=

db_seeder.file.statistics.delimiter=\t

db_seeder.file.statistics.header=ticker symbol;DBMS;db type;total ms;start time;end time;host name;no. cores;operating system;total DDL ms;drop constr. ms;add constr. ms;total DML ms;constraints

db_seeder.file.statistics.name=resources/statistics/db_seeder_statistics.tsv

db_seeder.file.summary.name=

db_seeder.file.summary.source=resources/statistics

4.4.1 Detailed statistical data

File name syntax: db_seeder_<bash|cmd>_complete_<company|syntax>_<DBSeeder version>_<vmware|wsl2|win10>.<csv|tsv>

Explanation for the columns:

ticker symbol- internal abbreviation used for the databaseDBMS- official DBMS namedb type- client version, embedded version or via trinototal ms- total time of DDL and DML operations in millisecondsstart time- date and time when the database operations were startedend time- date and time when the database operations were completedhost name- name of the computer connected to a computer networkno. cores- number of CPU cores usedoperating systemtotal DDL ms- total time of DDL operations in millisecondsdrop constr. ms- total time to drop all constraintsadd constr. ms- total time to add the previously dropped constraintstotal DML ms- total time of DML operations in millisecondsconstraints- DML operations with enabled (active) or disabled (inactive) constraints (foreign, primary and unique key)

4.4.2 Performance data regarding constraints

File name syntax: db_seeder_<bash|cmd>_improvement_<company|syntax>_<DBSeeder version>_<vmware|wsl2|win10>.<csv|tsv>

Explanation for the columns:

DBMS- official DBMS nameType- client version, embedded version or via trinoms- total time of DDL and DML operations in millisecondsConstraints- DML operations with enabled (active) or disabled (inactive) constraints (foreign, primary and unique key)Improvment- improvement of total time if constraints are inactive

4.4.3 Historical statistical data

File name syntax: db_seeder_summary_<first DBSeeder version>-<current DBSeeder version>.<csv|tsv>

Explanation for the columns:

ticker symbol- internal abbreviation used for the databaseDBMS- official DBMS nameversion- DBSeeder versioncreator- shell environment:bashorcmddb type- client version, embedded version or via trinoconstraints- DML operations with enabled (activeandactive - no choice) or disabled (inactive) constraints (foreign, primary and unique key)schema- identification term for the scheme definition used:companyorsyntaxtotal ms- total time of DDL and DML operations in millisecondsstart time- date and time when the database operations were startedend time- date and time when the database operations were completedhost name- name of the computer connected to a computer networkno. cores- number of CPU cores usedoperating systemfile name- name of the file with the source datatotal DDL ms- total time of DDL operations in millisecondsdrop constr. ms- total time to drop all constraintsadd constr. ms- total time to add the previously dropped constraintstotal DML ms- total time of DML operations in milliseconds

5. RDBMS Specific Technical Details

DBeaver is a great tool to analyze the database content.

In the file directory resources/dbeaver you will also find a file exported from DBeaver with the connection parameters currently used in DBSeeder.

5.1 AgensGraph

- data types:

DBSeeder Type |

AgensGraph Database Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BYTEA |

| CLOB | TEXT |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE: see PostgreSQL

- CREATE SCHEMA: see PostgreSQL

- CREATE TABLE: see PostgreSQL

- CREATE USER: see PostgreSQL

-

Docker image (latest):

- pull command:

docker pull bitnine/agensgraph:v2.13.0 - DockerHub

- pull command:

-

encoding: see PostgreSQL

-

issue tracking: GitHub

-

JDBC driver (latest):

- version 1.4.2-c1

- Maven repository

-

source code: GitHub

5.2 Apache Derby

- data types:

DBSeeder Type |

Apache Derby Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA

- CREATE TABLE

- CREATE USER - n/a

-

Docker image (latest - only client version):

- pull command:

docker pull konnexionsgmbh/apache_derby:10.16.1.1 - DockerHub

- pull command:

-

encoding: by using the following JVM parameter:

-Dderby.ui.codeset=UTF8 -

issue tracking: Jira

-

JDBC driver (latest):

- client version: Maven repository

- embedded version: Maven repository

-

source code: Apache Derby

-

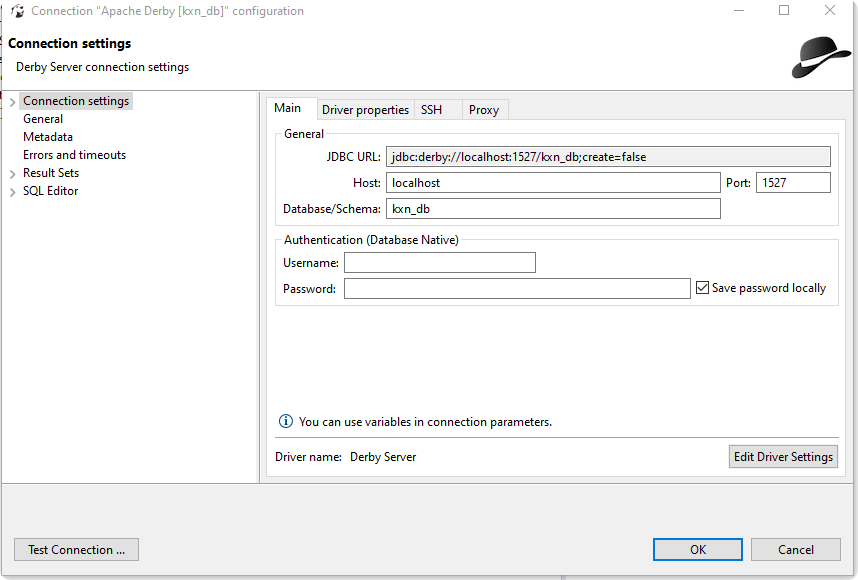

DBeaver database connection settings:

-- client version:

5.3 CockroachDB

- data types:

DBSeeder Type |

CockroachDB Type |

|---|---|

| BIGINT | INT |

| BLOB | BYTES |

| CLOB | STRING |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | STRING |

-

DDL syntax:

-

Docker image (latest):

- pull command:

docker pull cockroachdb/cockroach:latest - DockerHub

- pull command:

-

encoding: by default

utf8encoding -

issue tracking: GitHub

-

JDBC driver (latest):

- same as PostgreSQL

-

privileged database access: user

root -

source code: GitHub

-

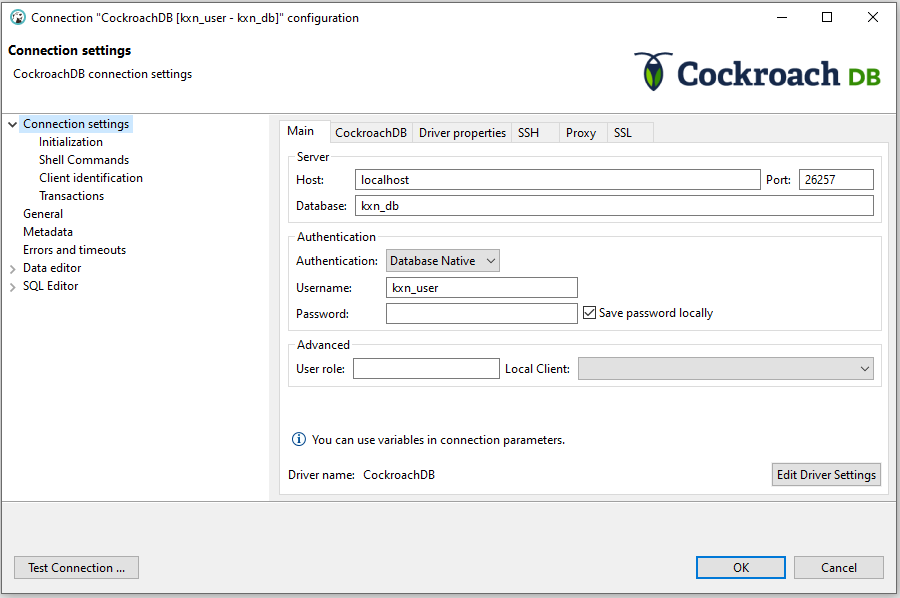

DBeaver database connection settings:

5.4 CrateDB

- data types:

DBSeeder Type |

CrateDB Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | OBJECT |

| CLOB | TEXT |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | TEXT |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command: `docker pull crate:latest

- DockerHub

-

encoding: by default

utf8encoding -

issue tracking: GitHub

-

JDBC driver (latest):

-

privileged database access: user

crate -

restrictions:

- no constraints (e.g. foreign keys or unique keys)

- no transaction concept

- no triggers

- only a very proprietary BLOB implementation

-

source code: GitHub

-

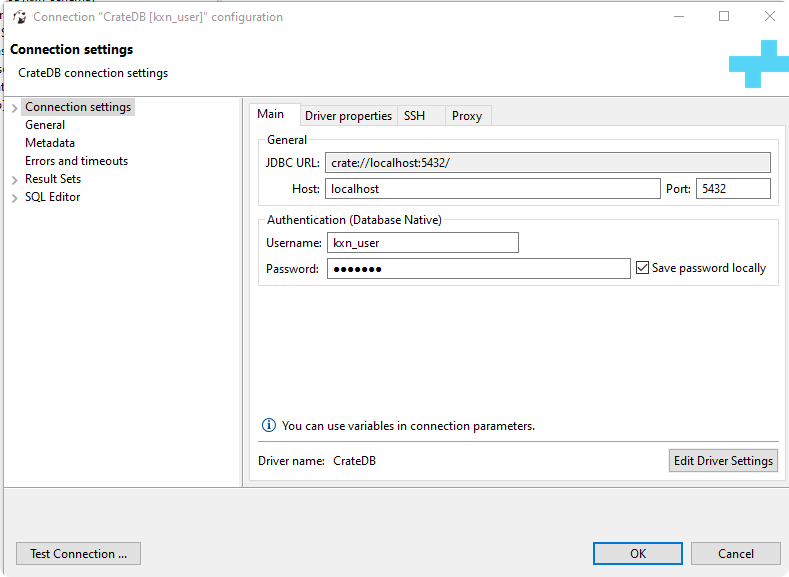

DBeaver database connection settings:

5.5 CUBRID

- data types:

DBSeeder Type |

CUBRID Type |

|---|---|

| BIGINT | INT |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull cubrid/cubrid:11.0 - DockerHub

- pull command:

-

encoding: by specifying after the database name when database is created:

kxn_db de_DE.utf8 -

issue tracking:

-

JDBC driver (latest):

-

privileged database access: users

DBAandPUBLIC -

restrictions: no full UTF-8 support

-

source code: GitHub

-

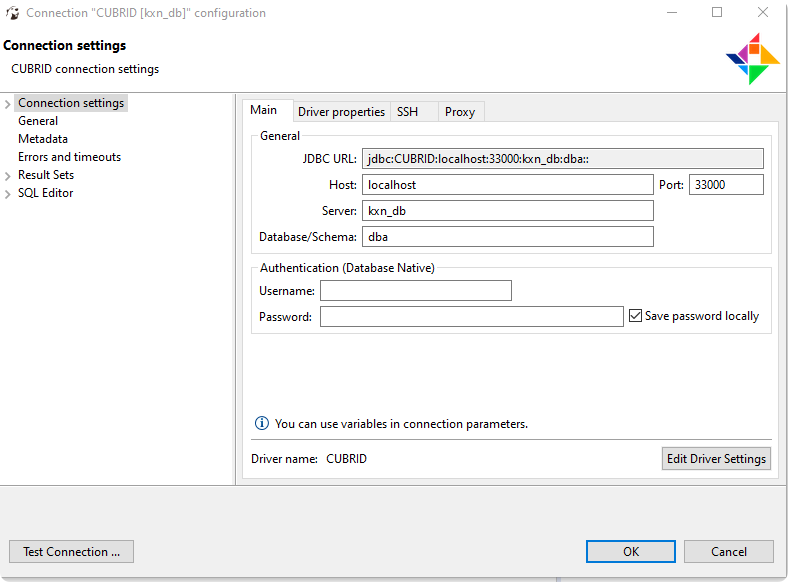

DBeaver database connection settings:

5.6 Exasol

- data types:

DBSeeder Type |

Exasol Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | VARCHAR(2000000) |

| CLOB | VARCHAR(2000000) |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull exasol/docker-db:latest - DockerHub

- pull command:

-

JDBC driver (latest):

-

privileged database access: user

syspasswordexasol -

restrictions:

- no unique key constraints

-

DBeaver database connection settings:

5.7 Firebird

- data types:

DBSeeder Type |

Firebird Type |

|---|---|

| BIGINT | INTEGER |

| BLOB | BLOB |

| CLOB | BLOB SUB_TYPE 1 |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull jacobalberty/firebird:v4.0.2 - DockerHub

- pull command:

-

encoding: by using the following JDBC URL parameter:

encoding=UTF8 -

issue tracking: GitHub

-

JDBC driver (latest):

-

privileged database access: user

SYSDBA -

source code: GitHub

-

DBeaver database connection settings:

5.8 H2 Database Engine

- data types:

DBSeeder Type |

H2 Database Engine Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull konnexionsgmbh/h2_database_engine:2.1.214 - DockerHub

- pull command:

-

encoding: H2 internally uses Unicode, and supports all character encoding systems and character sets supported by the virtual machine you use.

-

issue tracking: GitHub

-

JDBC driver (latest):

-

privileged database access: user

sa -

source code: GitHub

-

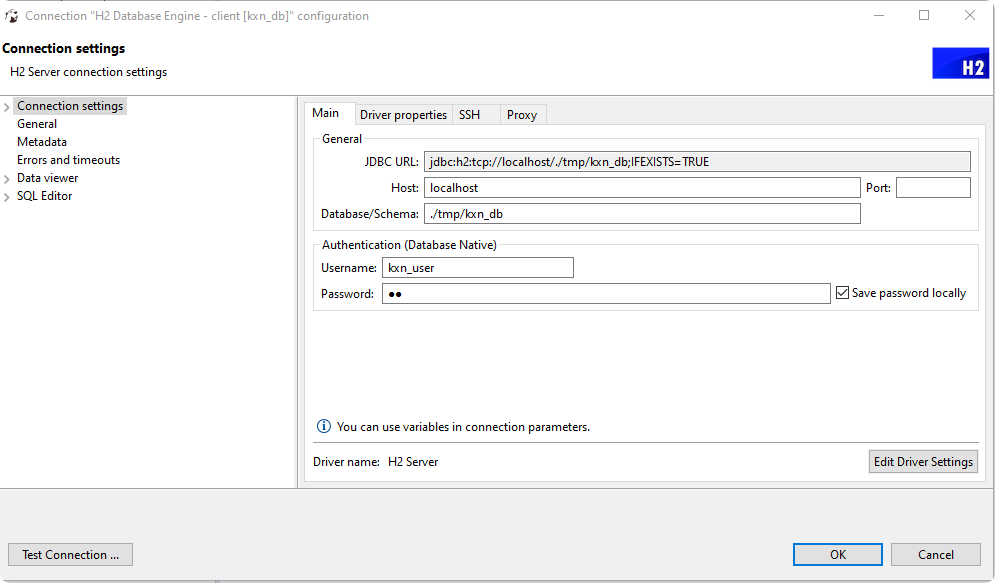

DBeaver database connection settings:

-- client version:

5.9 HeavyDB

- data types:

DBSeeder Type |

HeavyDB Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | TEXT ENCODING NONE |

| CLOB | TEXT ENCODING NONE |

| TIMESTAMP | TIMESTAMP(0) |

| VARCHAR | TEXT ENCODING NONE |

- DDL syntax:

- CREATE DATABASE

- CREATE SCHEMA - n/a

- CREATE TABLE

-

Docker image (latest):

- pull command:

docker pull omnisci/core-os-cpu -

encoding: no special configuration should be needed

-

issue tracking: GitHub

-

JDBC driver (latest):

-

privileged database access:

- database:

omnisci -

user:

admin -

restrictions:

- column and table names case sensitive

- max. column length 32767 bytes

- no binary columns

- no constraints, e.g. unique keys

- no foreign / referential keys

- no primary key

-

no triggerss

-

source code: GitHub

-

DBeaver database connection settings:

5.10 HSQLDB

- data types:

DBSeeder Type |

HSQLDB Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull konnexionsgmbh/hypersql_database:latest - DockerHub

- pull command:

-

encoding: by using the following system property

sqlfile.charset=UTF-8. -

issue tracking: SourceForge

-

JDBC driver (latest):

-

privileged database access: user

SA -

source code: SourceForge

-

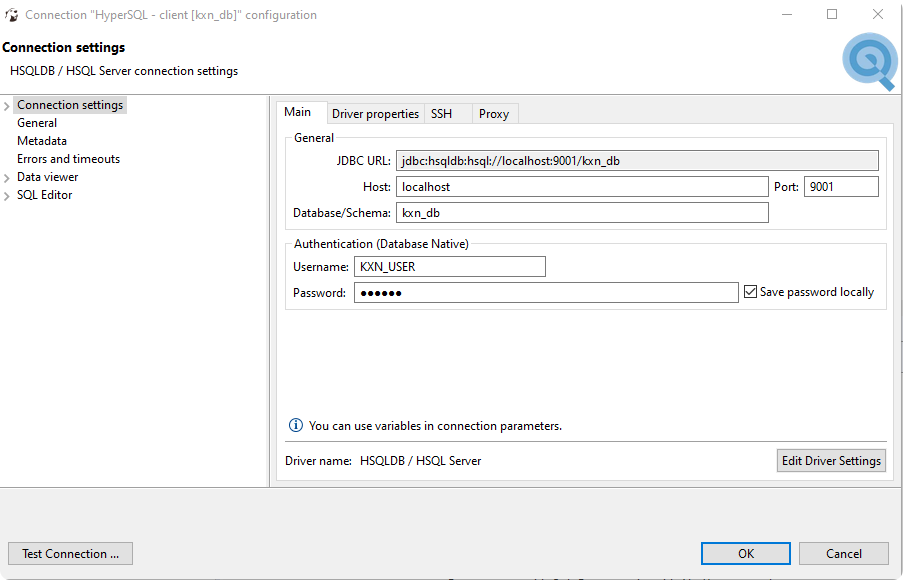

DBeaver database connection settings:

-- client version:

5.11 IBM Db2 Database

- data types:

DBSeeder Type |

IBM Db2 Database Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

-

Docker image (latest):

- pull command:

docker pull ibmcom/db2:11.5.8.0 - DockerHub

- pull command:

-

encoding:

- by using the CCSID clause in the CREATE statements for any of the following objects:

- Database

- Table space

- Table

- procedure or function

- by using the CCSID clause in the CREATE statements for any of the following objects:

-

JDBC driver (latest):

-

privileged database access: user

db2inst1 -

restrictions: the IBM Db2 DBMS only accepts operating system accounts as database users

-

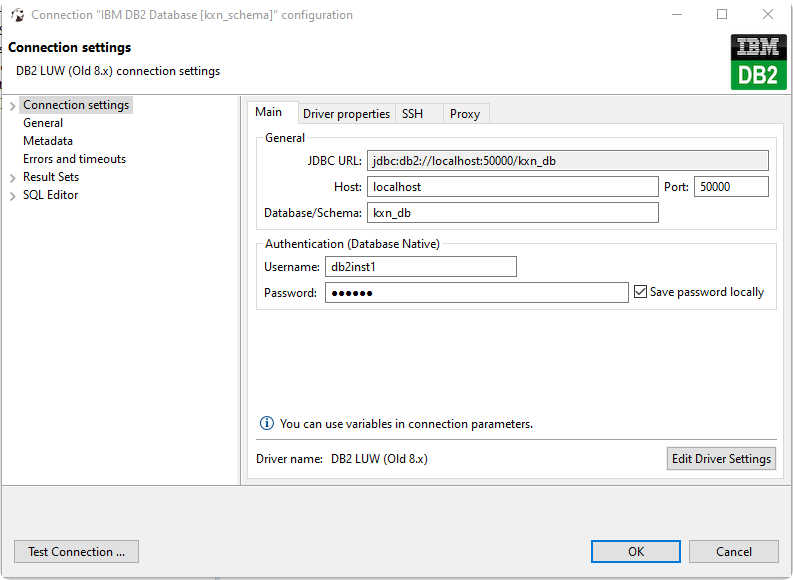

DBeaver database connection settings:

5.12 IBM Informix

- data types:

DBSeeder Type |

IBM Informix Database Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | DATETIME YEAR TO FRACTION |

| VARCHAR | VARCHAR (1-254) / LVARCHAR |

-

DDL syntax:

- CREATE DATABASE

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull ibmcom/informix-developer-database:14.10.FC7W1DE - DockerHub

- pull command:

-

encoding:

- code-set conversion value is extracted from the DB_LOCALE value specified at the time the connection is made

-

JDBC driver (latest):

-

privileged database access:

- user

informix - password

in4mix - database / schema

sysmaster - INFORMIXSERVER

informix

- user

-

restrictions:

- the IBM Informix DBMS only accepts operating system accounts or users mapped to operating system accounts as database users

- no named constraints in ALTER TABLE ADD CONSTRAINT

-

DBeaver database connection settings:

5.13 MariaDB Server

- data types:

DBSeeder Type |

MariaDB Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | LONGBLOB |

| CLOB | LONGTEXT |

| TIMESTAMP | DATETIME |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull mariadb:latest - DockerHub

- pull command:

-

encoding:

- server level:

SET character_set_server = 'latin2'; - database level:

CHARACTER SET = 'keybcs2' - table level:

CHARACTER SET 'utf8' - column level:

CHARACTER SET 'greek'

- server level:

-

issue tracking: Jira

-

JDBC driver (latest):

-

privileged database access:

- user:

mysql - password;

root

- user:

-

source code: GitHub

-

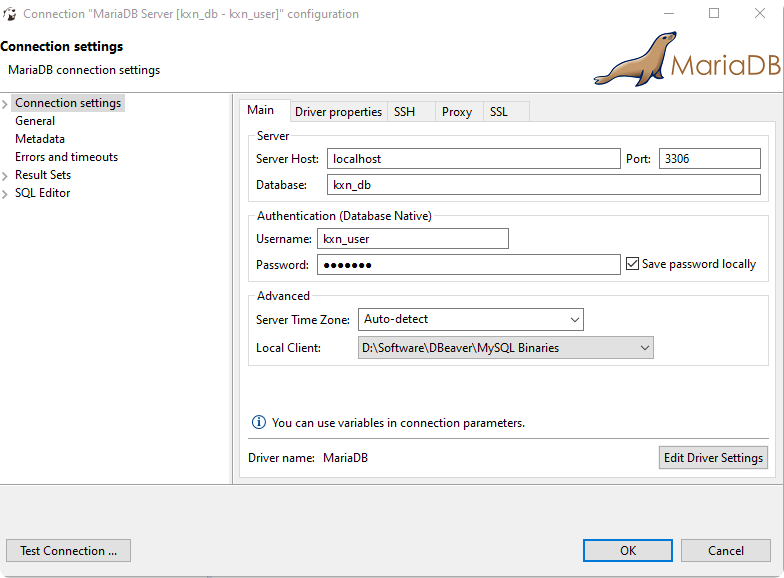

DBeaver database connection settings:

5.14 Mimer SQL

- data types:

DBSeeder Type |

MimerSQL Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | NVARCHAR |

-

DDL syntax:

- CREATE DATABASE

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull mimersql/mimersql_v11.0.5a - DockerHub

- pull command:

-

encoding: NCHAR, NVARCHAR

-

JDBC driver (latest):

-

privileged database access:

- database;

mimerdb - user:

SYSADM

- database;

-

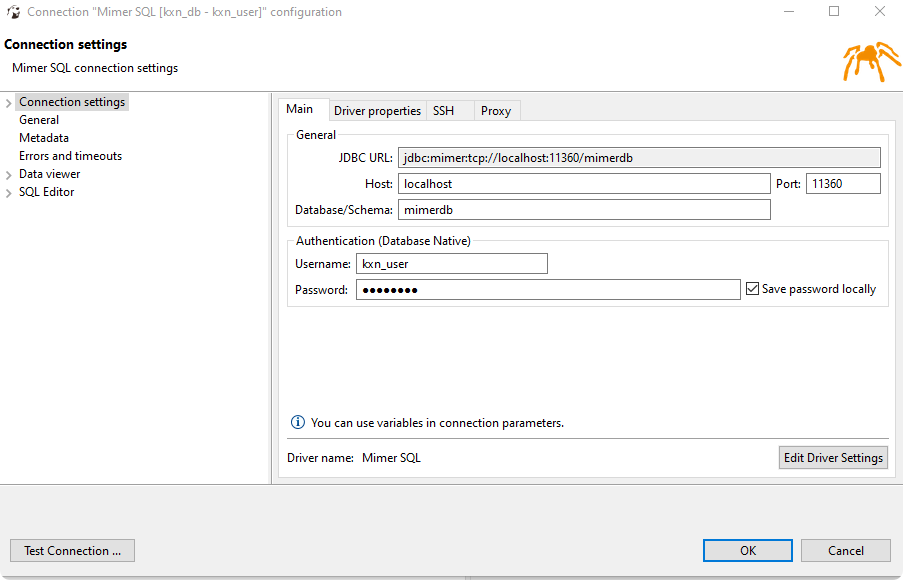

DBeaver database connection settings:

5.15 MonetDB

- data types:

DBSeeder Type |

MonetDB Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull monetdb/monetdb:Sep2022-SP1 - DockerHub

- pull command:

-

encoding: no special configuration should be needed

-

issue tracking: GitHub

-

JDBC driver (latest):

-

privileged database access:

- database:

demo - user:

monetdb - password:

monetdb

- database:

-

source code: GitHub

-

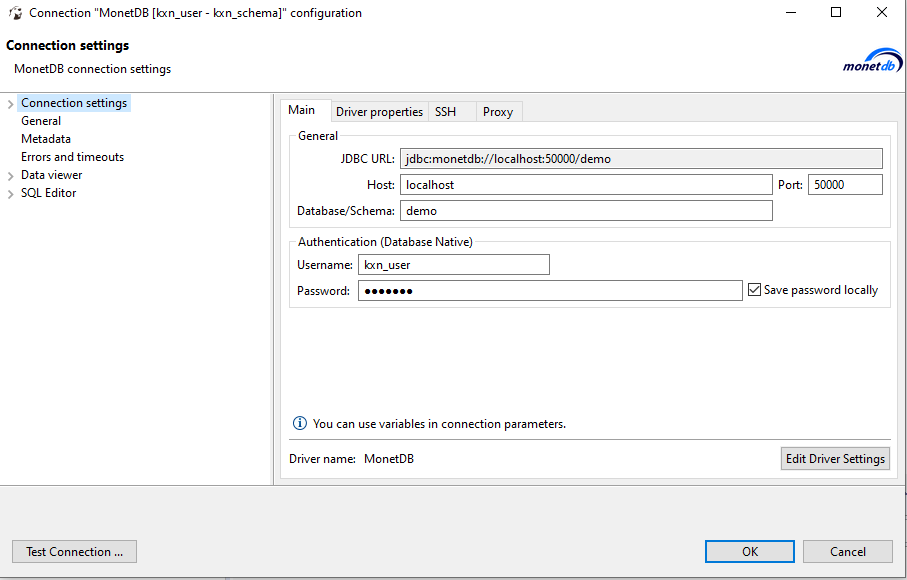

DBeaver database connection settings:

5.16 MySQL Database

- data types:

DBSeeder Type |

MySQL Database Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | LONGBLOB |

| CLOB | LONGTEXT |

| TIMESTAMP | DATETIME |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER

-

Docker image (latest):

- pull command:

docker pull mysql:8.0.31 - DockerHub

- pull command:

-

encoding: for applications that store data using the default MySQL character set and collation (utf8mb4, utf8mb4_0900_ai_ci), no special configuration should be needed

-

JDBC driver (latest):

-

privileged database access:

- database:

sys - user:

root

- database:

-

source code: GitHub

-

DBeaver database connection settings:

5.17 Oracle Database

- data types:

DBSeeder Type |

Oracle Database Type |

|---|---|

| BIGINT | NUMBER |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR2 |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER

-

Docker image: DockerHub

-

encoding: since Oracle Database 12c Release 2 the default database character set used is the Unicode character set AL32UTF8

-

JDBC driver (latest):

-

privileged database access:

- database:

orclpdb1 - user:

SYS AS SYSDBA

- database:

-

DBeaver database connection settings:

5.18 Percona Server for MySQL

- data types:

DBSeeder Type |

Percona Sercver Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | LONGBLOB |

| CLOB | LONGTEXT |

| TIMESTAMP | DATETIME |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE: see MySQL Database

- CREATE SCHEMA - n/a

- CREATE TABLE: see MySQL Database

- CREATE USER: see MySQL Database

-

Docker image (latest):

- pull command:

docker pull percona/percona-server:latest - DockerHub

- pull command:

-

encoding: for applications that store data using the default MySQL character set and collation (utf8mb4, utf8mb4_0900_ai_ci), no special configuration should be needed

-

issue tracking: Jira

-

JDBC driver (latest):

- same as MySQL

-

privileged database access:

- database:

sys - user:

root

- database:

-

source code: GitHub

-

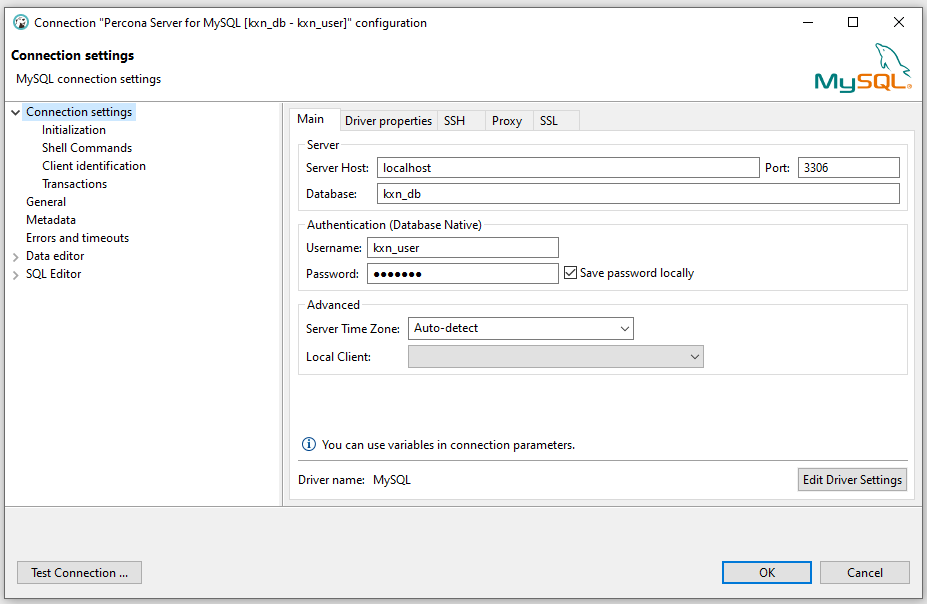

DBeaver database connection settings:

5.19 PostgreSQL

- data types:

DBSeeder Type |

PostgreSQL Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BYTEA |

| CLOB | TEXT |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

-

Docker image (latest):

- pull command:

docker pull postgres:15.1-alpine - DockerHub

- pull command:

-

encoding: when creating the database:

CREATE DATABASE testdb WITH ENCODING 'EUC_KR' ... -

issue tracking: PostgreSQL

-

JDBC driver (latest):

-

documentation: The PostgreSQL JDBC Interface

-

release notes: History of Changes

-

source code: GitHub

-

DBeaver database connection settings:

5.20 SQL Server

- data types:

DBSeeder Type |

SQL Server Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | VARBINARY (MAX) |

| CLOB | VARCHAR (MAX) |

| TIMESTAMP | DATETIME2 |

| VARCHAR | VARCHAR |

-

DDL syntax:

-

Docker image (latest):

- pull command:

docker pull mcr.microsoft.com/mssql/server:2022-latest - DockerHub

- pull command:

-

encoding: to use the UTF-8 collations that are available in SQL Server 2019 (15.x), you must select UTF-8 encoding-enabled collations (_UTF8)

-

JDBC driver (latest):

-

privileged database access:

- database:

master - user:

sa

- database:

-

restrictions: no full UTF-8 support in the given Docker images

-

DBeaver database connection settings:

5.21 SQLite

- data types:

DBSeeder Type |

SQLite Type |

|---|---|

| BIGINT | INTEGER |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | DATETIME |

| VARCHAR | VARCHAR2 |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER - n/a

-

encoding: by using the following parameter:

PRAGMA encoding='UTF-8'; -

issue tracking: SQLite

-

JDBC driver (latest):

- Maven repository

- determines also the DBMS version

-

restrictions:

- no Docker image necessary, hence not available

- no user management

-

source code: SQLite

-

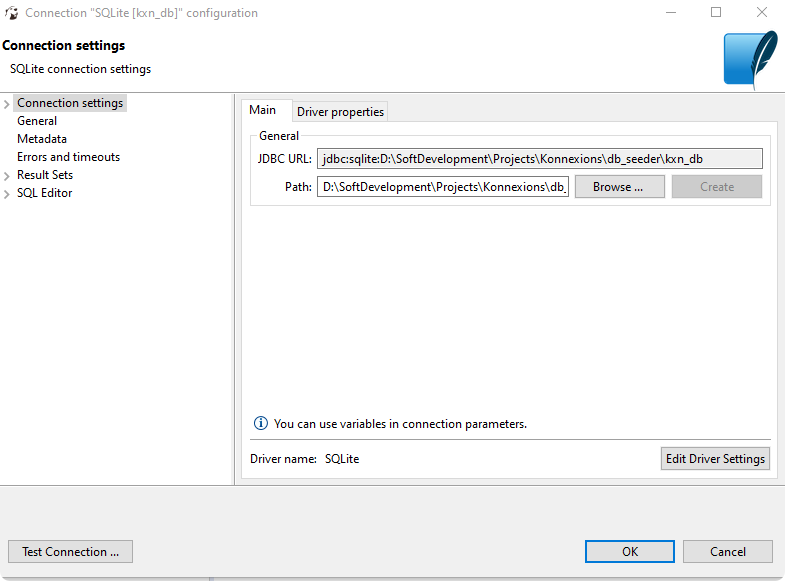

DBeaver database connection settings:

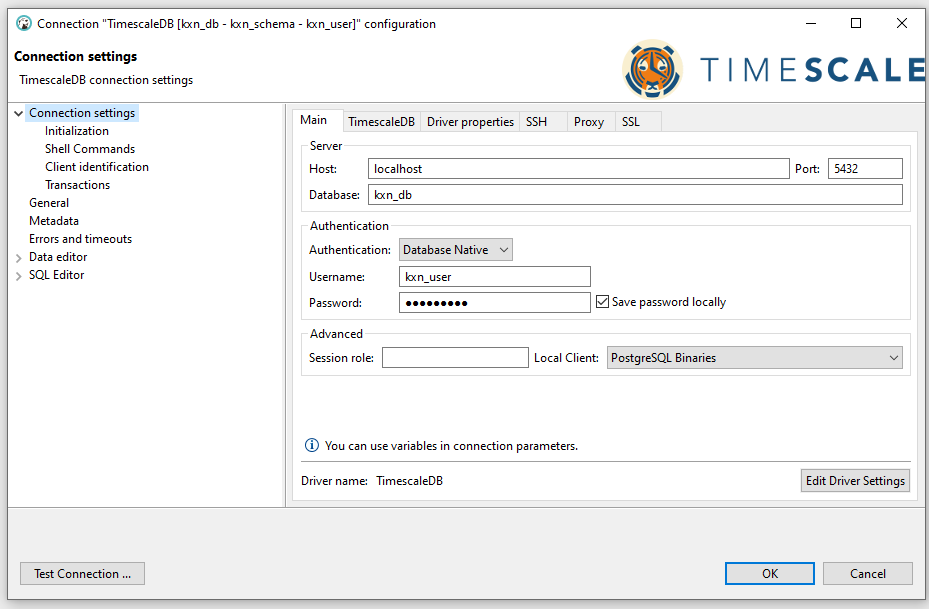

5.22 TimescaleDB

- data types:

DBSeeder Type |

AgensGraph Database Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BYTEA |

| CLOB | TEXT |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE: see PostgreSQL

- CREATE SCHEMA: see PostgreSQL

- CREATE TABLE: see PostgreSQL

- CREATE USER: see PostgreSQL

-

Docker image (latest):

- pull command:

docker pull timescale/timescaledb:latest - DockerHub

- pull command:

-

encoding: see PostgreSQL

-

issue tracking: GitHub

-

JDBC driver (latest):

- same as PostgreSQL

-

source code: GitHub

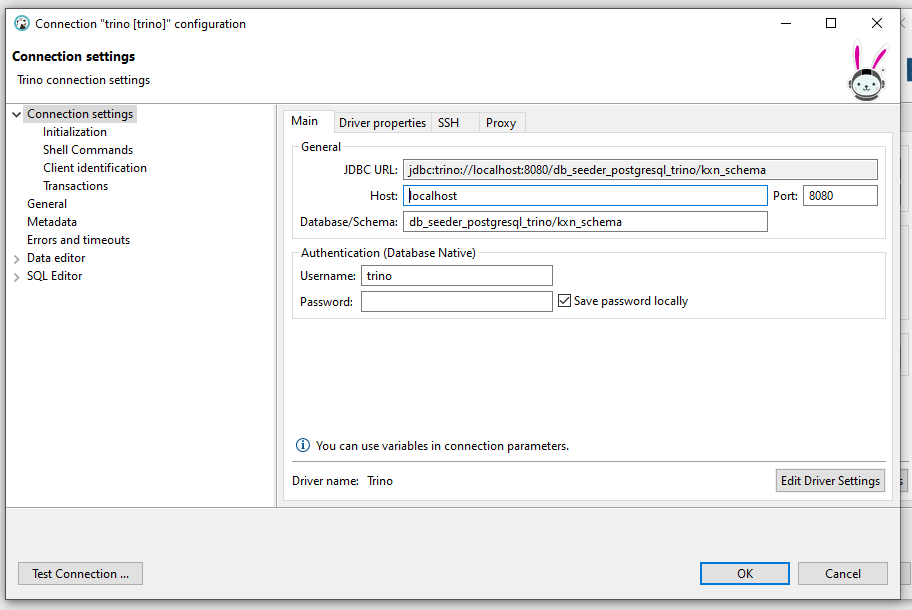

5.23 trino

- data types:

DBSeeder Type |

trino Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BLOB |

| CLOB | CLOB |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA

- CREATE TABLE

- CREATE USER - n/a

-

Docker image (latest):

- pull command:

docker pull trinodb/trino:403 - DockerHub

- pull command:

-

encoding: full support of UTF-8 (see here)

-

issue tracking: GitHub

-

JDBC driver (latest):

-

source code: GitHub

-

DBeaver database connection settings:

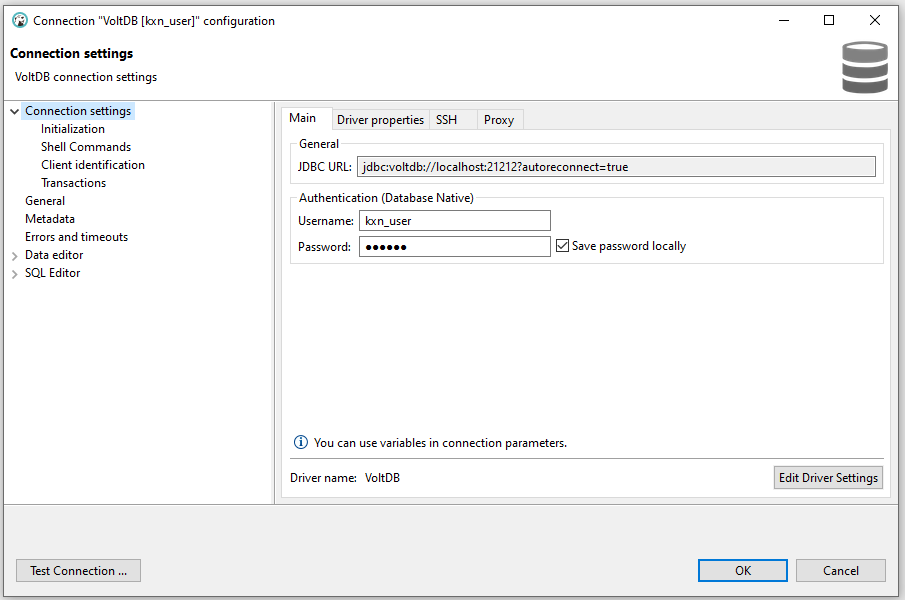

5.24 VoltDB

- data types:

DBSeeder Type |

VoltDB Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | VARBINARY(1048576) |

| CLOB | VARCHAR(1048576) |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

- CREATE DATABASE - n/a

- CREATE SCHEMA - n/a

- CREATE TABLE

- CREATE USER - n/a

-

Docker image (latest):

- pull command:

docker pull voltdb/voltdb-community:9.2.1 - DockerHub

- pull command:

-

issue tracking: Jira

-

JDBC driver (latest):

-

restrictions: no support of autoincrement, check constraints or foreign keys

-

source code: GitHub

-

DBeaver database connection settings:

5.25 YugabyteDB

- data types:

DBSeeder Type |

YugabyteDB Database Type |

|---|---|

| BIGINT | BIGINT |

| BLOB | BYTEA |

| CLOB | TEXT |

| TIMESTAMP | TIMESTAMP |

| VARCHAR | VARCHAR |

-

DDL syntax:

-

Docker image (latest):

- pull command:

docker pull yugabytedb/yugabyte:latest - DockerHub

- pull command:

-

encoding: see PostgreSQL

-

issue tracking: GitHub

-

JDBC driver (latest):

-

source code: GitHub

-

DBeaver database connection settings:

6. trino

trino can integrate the following DBMS, among others:

- MySQL via the MySQL Connector,

- Oracle via the Oracle Connector, and

- PostgreSQL via the PostgreSQL Connector.

- SQL Server via the SQL Server Connector,

DBSeeder makes it possible to use trino's JDBC driver and the corresponding connectors as an alternative to the JDBC drivers of the DBMS suppliers.

To use the trino JDBC driver, a trino server is required.

With the script db_seeder_trino_environment a trino server can be set up.

Since trino does not support the Windows operating system, a suitable Docker image is created for Windows.

For Linux, e.g. Ubuntu, the script can alternatively be used to perform a local installation of the trino server.